Have you heard about the latest artificial intelligence (AI) risk? It’s not a wild-eyed fantasy about killer robots and controlling machines. This concern is firmly rooted in the real world: are unrealistic fears about AI technologies leading to prohibitive policies that quash innovation?

Unfounded fear of AI is widespread and not limited to the technophobes among us. In fact, some of the brightest minds in science and technology, like Elon Musk and Stephen Hawking (hardly Luddites!), have fanned the flames of a growing technopanic about AI.

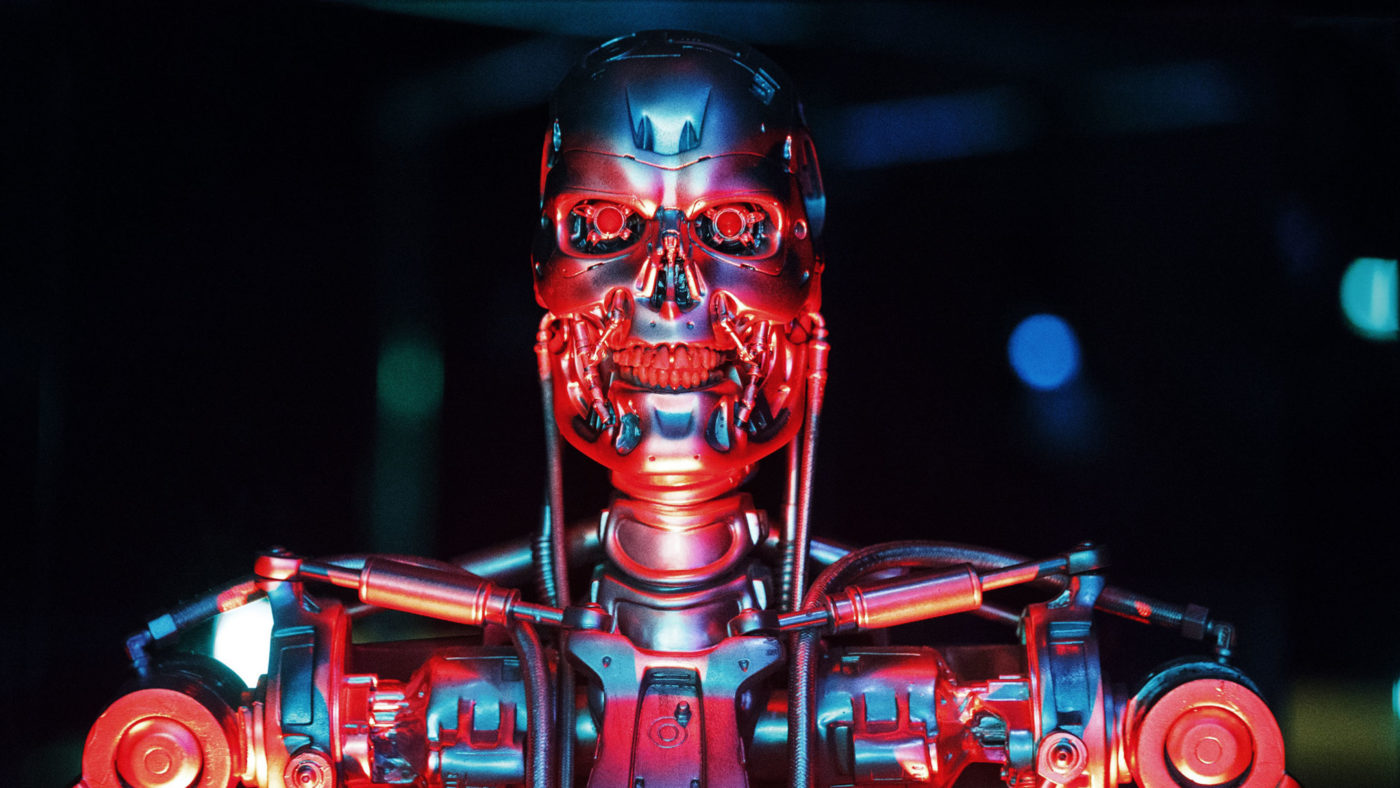

Musk has compared AI to an evil demon and called on the National Governors Association to exorcise it with regulations as soon as possible. Hawking, channelling popular dystopian films like the Terminator series, predicted that AI could "spell the end of the human race."

Sounds like some pretty scary stuff. And when society's latent scepticism of change is backed by the public worries of such well-respected figures, many of us are inclined to just say no to AI. But policy needs to be grounded in science – not science fiction.

We recently published a study for the Mercatus Center at George Mason University explaining exactly what is on the line with AI policy. If unfounded fears of worst-case scenarios lead us to clamp down on a new technology with prohibitive rules, we will never see the best-case outcomes that could enrich our welfare and even save lives.

Consider the question of safety. Murderous AIs may get the Hollywood roles and the headlines, but did you know that doctors have been using AI-assisted technologies to diagnose illnesses, perform surgeries, and monitor health outcomes for years?

Indeed, the health care industry has been a leading investor in AI technologies, signalling the immense benefits they could bring to our well-being. Many people are likely already better off – or even alive – because of these advances in health technology.

Or think about manufacturing. Much ink has been spilled over how AI technologies will destroy work as we know it. Yet the same criticisms were levelled at mechanised weaving in the 19th century, and we've hardly lived in a jobless dystopia since then.

Rather, labour-saving technologies lower the cost of goods while creating opportunities for employment in new, previously unimagined industries.

Market research suggests that AI technologies can greatly improve the efficiency of modern manufacturing methods, cut costs, and deliver the right products to the right markets. And while labour market disruptions are never comfortable, displaced workers generally find new, better jobs within a reasonable amount of time. In the meantime, society gets to enjoy a higher level of production, greater choice, and a better quality of life.

Driverless cars could be the most significant AI application on the horizon. Over 90 people die in auto accidents every day in the US – over 35,000 per year – and 94 per cent of those accidents are attributable to human error behind the wheel (e.g., distraction, drowsiness, and drunk driving). "Intelligent" cars could help reverse this horrendous death toll and become the greatest public health success story of our lifetime. This is why public policy towards AI matters so much: the longer we delay deploying driverless car technology, the greater the cost in human lives.

Interestingly, many of the new fears surrounding AI technologies have nothing to do with evil computers or jobless futures at all. Rather, people are concerned that algorithms and machine learning programs may deepen social inequality and prejudice. For example, search results for names associated with a particular ethnicity may imply disproportionate criminality on the part of that group. Even worse, the "predictive policing" software employed by law enforcement may lead police to over-detain people of a certain ethnic group.

But such concerns are more appropriately addressed through the courts and public pressure – not through prohibitive regulation.

In general, we advocate embracing "permissionless innovation" to the greatest extent possible for all AI technologies. This approach calls for humility, patience, and active collaboration between policymakers and innovators to responsibly address social concerns as they arise, rather than banning or restricting new technologies outright from the get-go.

So what should replace the prohibitive, "precautionary" regulation of the past? A combination of multi-stakeholder governance, "soft law" remedies, and proactive education can best address many of the concerns raised above. Much of the internet is actually governed in this manner today. It was the government's deliberately hands-off approach to the early web that made possible the wide range of innovations we enjoy today.

The benefits of this approach are considerable. This policy stance allows for the greatest range of discovery and production while keeping policymakers informed and active in developing smart solutions.

As we conclude in our study, the benefits of AI technologies are simply too great for us to allow them to be extinguished by poorly considered policy. We have now entered the age of smart machines. Isn't it time our regulations got smart enough to keep up?