The European Union’s idea of technological progress seems a bit out of date.

In late February, the European Commission released its draft strategy to advance data and AI. Its support for ‘trustworthy’ AI sounds reasonable enough, but it reveals a glaring flaw in Brussels’ approach: a crude, simplistic division between low- and high-risk applications.

At the moment, the EU’s proposals betray an unjustified anxiety about the risks of so-called deep learning, the algorithmic approach behind much of the recent progress in AI.

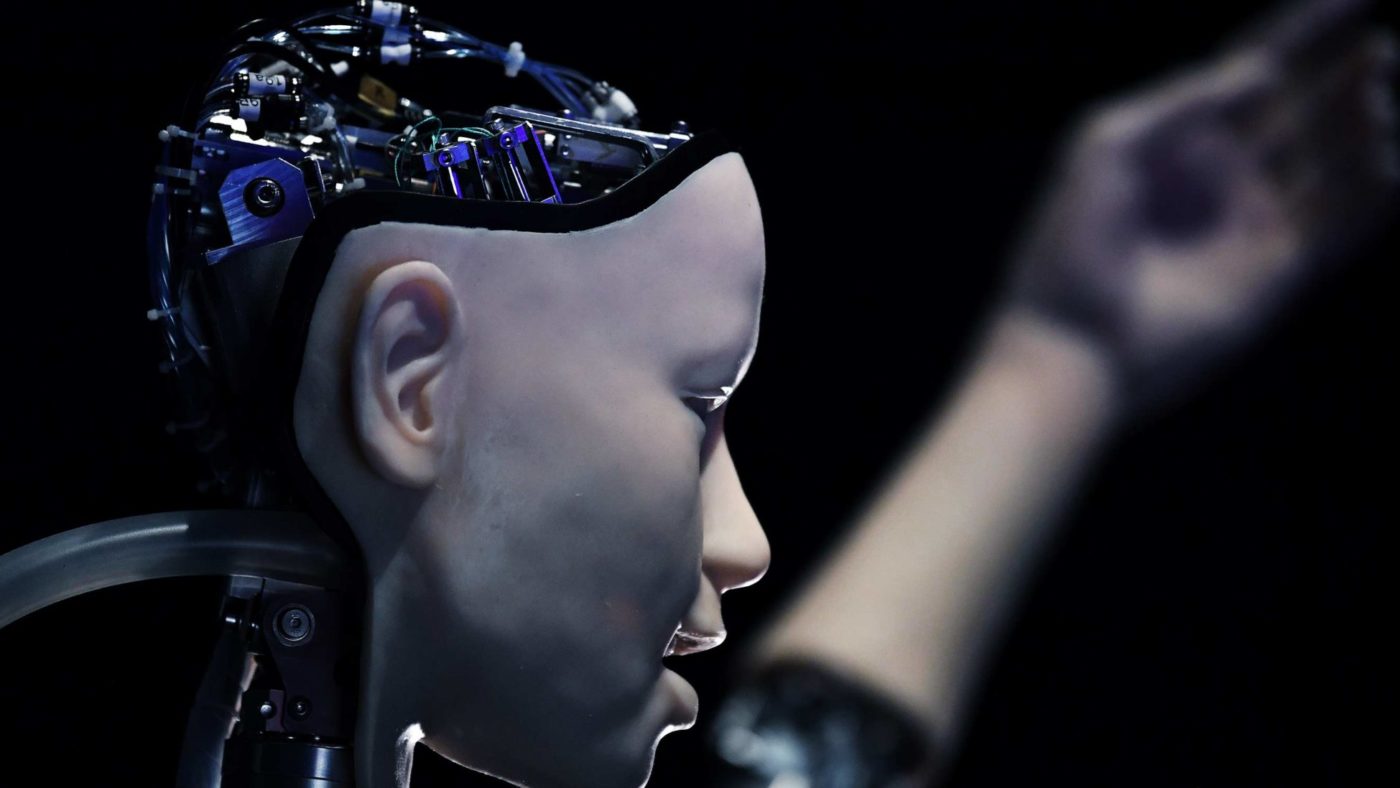

Simply put, deep learning works by extracting complicated features from input data, such as different parts of an image, in order to achieve a given objective like labelling that image. It relies on an algorithm called “backpropagation”, which measures the error between the network’s prediction and the true result, works out how much each of the network’s internal parameters contributed to that error, and adjusts them so that future errors are smaller.
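For readers curious about the mechanics, here is a minimal Python sketch of a tiny network trained by backpropagation. It is illustrative only and not drawn from any system mentioned in this piece; the function name `train`, the three-unit hidden layer, the learning rate and the XOR toy data are all assumptions chosen for brevity. The forward pass computes intermediate “features”, the backward pass uses the chain rule to work out each weight’s share of the error, and each weight is then nudged in the direction that reduces it.

```python
import math
import random

def sigmoid(z):
    # Clamp the input to avoid overflow in math.exp for extreme values.
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))

def train(data, hidden=3, lr=0.5, epochs=2000, seed=0):
    """Hypothetical example: a 1-hidden-layer network trained by backpropagation.

    data: list of (x1, x2, target) tuples with targets in {0, 1}.
    Returns the mean squared error recorded for each epoch.
    """
    rng = random.Random(seed)
    # Hidden-layer weights: one [w1, w2, bias] triple per hidden unit.
    W = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(hidden)]
    # Output weights: one per hidden unit, with the output bias stored last.
    V = [rng.uniform(-1, 1) for _ in range(hidden + 1)]
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x1, x2, t in data:
            # Forward pass: compute hidden "features", then the prediction.
            h = [sigmoid(w[0] * x1 + w[1] * x2 + w[2]) for w in W]
            y = sigmoid(sum(V[j] * h[j] for j in range(hidden)) + V[-1])
            err = y - t
            total += err * err
            # Backward pass: chain rule from the squared error back to each weight.
            d_out = 2 * err * y * (1 - y)
            d_hidden = [d_out * V[j] * h[j] * (1 - h[j]) for j in range(hidden)]
            # Gradient-descent step: move each weight against its gradient.
            for j in range(hidden):
                V[j] -= lr * d_out * h[j]
                W[j][0] -= lr * d_hidden[j] * x1
                W[j][1] -= lr * d_hidden[j] * x2
                W[j][2] -= lr * d_hidden[j]
            V[-1] -= lr * d_out
        losses.append(total / len(data))
    return losses

# XOR: the output is 1 only when exactly one input is 1.
XOR = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]
losses = train(XOR)
print(f"first epoch error: {losses[0]:.3f}, last epoch error: {losses[-1]:.3f}")
```

The error should fall over training, though a network this small can occasionally settle short of a perfect fit. Real deep-learning systems apply the same principle across millions of parameters, using libraries that compute the gradients automatically.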

It’s a promising technology, which is why deep learning has been at the heart of AI innovation since 2012. But the EU isn’t having any of that. Instead, it wants to restrict the use of deep learning in areas deemed ‘high risk’, such as medicine and transport – areas in which deep learning has huge potential for positive change.

The Commission’s logic is that modern statistical approaches to AI are often “black boxes”, which prevent outside observers from fully understanding how an algorithm reaches its decisions. While it is true that current AI systems are often inscrutable, this doesn’t stop them from being tremendously helpful in “high-risk” applications. In medicine, for example, AI can be a valuable support tool, providing information about treatment procedures or hospital resource allocation, while doctors still apply their own domain knowledge and use their expertise to make the final judgement call on an algorithm’s recommendations.

Despite the opacity of today’s AI systems, areas such as causality research, algorithmic fairness and interpretability research are attracting some of the best and brightest in the field. Whether this research pays off depends to a great extent on the freedom researchers are given to develop and test their ideas.

And this is an area in which the UK is well placed to prosper. The NHS has made a name for itself globally through its willingness to partner with AI firms to deliver better-quality treatment. For instance, it has teamed up with DeepMind, a UK-based AI firm, to improve the detection of acute kidney injury, a cause of many preventable deaths. Another British firm, Babylon Health, has partnered with NHS practices to provide digital diagnostics to patients, again easing the strain on an overburdened system. These are exactly the kinds of exciting initiatives that the EU’s policies would seek to limit.

As it sets a new course outside the bloc, the UK ought to ramp up its support for developing and deploying AI in the sectors where it can deliver the greatest benefit. In doing so, it should distinguish itself from the EU’s direct regulation of technologies and focus instead on minimising the risks that arise from their use.

The Committee on Standards in Public Life’s February report on public-sector adoption of AI reflects this regulatory principle. Whereas the EU has shown anxiety about the technology itself, explicitly expressing a preference for an outdated approach to AI known as “symbolic systems”, the British committee’s report instead emphasises the oversight needed to ensure that new technology earns public trust.

This question of trust is less about the technology itself and more about the people building and using it. Restricting where AI can be applied, or supporting only certain technological standards, does little to actually make AI reliable. By contrast, creating responsible protocols for reporting mistakes made by algorithms, engaging the public in data-collection efforts and consulting multiple stakeholders to maximise the technology’s benefits can all help.

Canada provides a good model here. Its Pan-Canadian Artificial Intelligence Strategy has led the world in supporting core research, commercialisation and the enforcement of ethical standards in AI. The UK would do well to take its cues from the Canadians in this area.

At the end of the day, rather than fearmongering over the potential dangers of technology, governments should encourage new applications while developing the institutions that ensure they are used responsibly.

Happily, the UK already seems to have realised this, with the creation of an Office for Artificial Intelligence and those successful AI partnerships with the NHS. Now the task is to build those initiatives into a robust, comprehensive AI strategy which embraces innovation while maintaining public confidence.

Should it succeed, the UK will have taken advantage of one of the great opportunities of Brexit – and offered a stark contrast to the EU’s excessively cautious approach.


CapX depends on the generosity of its readers. If you value what we do, please consider making a donation.